How many times can a model fail you before you never return to it?

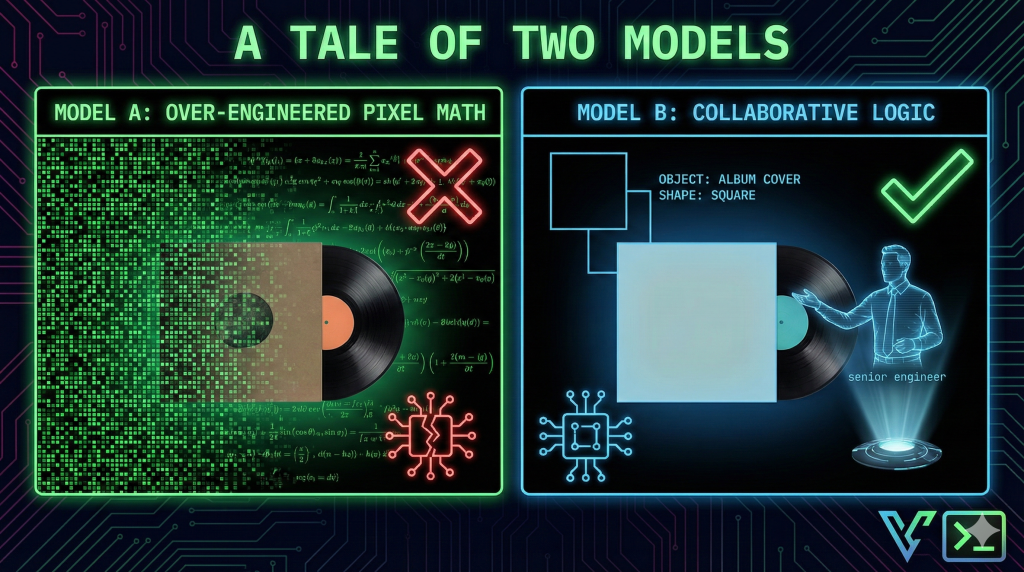

The Mission: I needed a simple computer vision tool for my e-commerce business. The goal was straightforward: look at a photo and tell me if it’s an Album Cover (a large square/rectangle) or a Vinyl Record (a large circle).

To a human, this is obvious. To a machine, it’s geometry. I anticipated a 15-minute build, and I was sadly disappointed because I picked the wrong tool.

The Failure: The ChatGPT Loop My first stop for this task was ChatGPT. I wanted to give it a chance because it has had the most recent update with the 5.2 launch.

I explained the problem. Instead of offering a modern solution using a vision library or a high-level abstraction, it dragged me into the weeds. It started generating incredibly complex code that tried to analyze the images pixel by pixel, using heavy mathematical formulas to detect shapes manually.

public static class PhotoTagger

{

public static PhotoTag Classify(Stream inputStream)

{

try

{

if (inputStream == null || !inputStream.CanRead)

return PhotoTag.Other;

if (inputStream.CanSeek)

inputStream.Position = 0;

using var original = SKBitmap.Decode(inputStream);

if (original == null || original.Width < 64 || original.Height < 64)

return PhotoTag.Other;

using var bmp = Downscale(original, maxDim: 256);

if (bmp == null)

return PhotoTag.Other;

int w = bmp.Width;

int h = bmp.Height;

float cx = (w - 1) / 2f;

float cy = (h - 1) / 2f;

float minDim = Math.Min(w, h);

// Search radii range where a record edge would exist

float rMin = minDim * 0.25f;

float rMax = minDim * 0.52f;

const int angles = 72;

var radii = new float[angles];

var edges = new float[angles];

int found = 0;

for (int i = 0; i < angles; i++)

{

float a = (float)(i * (2.0 * System.Math.PI / angles));

float dx = (float)System.Math.Cos(a);

float dy = (float)System.Math.Sin(a);

// Step along the ray (1px steps in downscaled image)

float bestEdge = 0f;

float bestR = 0f;

float prevLum = SampleLum(bmp, cx + dx * rMin, cy + dy * rMin);

for (float r = rMin + 1; r <= rMax; r += 1f)

{

float x = cx + dx * r;

float y = cy + dy * r;

if (!InBounds(x, y, w, h))

break;

float lum = SampleLum(bmp, x, y);

float edge = System.Math.Abs(lum - prevLum);

if (edge > bestEdge)

{

bestEdge = edge;

bestR = r;

}

prevLum = lum;

}

// Keep only meaningful edges

if (bestEdge >= 0.06f && bestR > 0f)

{

radii[i] = bestR;

edges[i] = bestEdge;

found++;

}

else

{

radii[i] = -1f;

edges[i] = 0f;

}

}

// Not enough angles found an edge -> probably not a disc

if (found < angles * 0.45f)

return PhotoTag.Cover;

// Compute robust radius estimate using median of found radii

var goodRadii = new System.Collections.Generic.List<float>(found);

var goodEdges = new System.Collections.Generic.List<float>(found);

for (int i = 0; i < angles; i++)

{

if (radii[i] > 0f)

{

goodRadii.Add(radii[i]);

goodEdges.Add(edges[i]);

}

}

goodRadii.Sort();

float medianR = goodRadii[goodRadii.Count / 2];

// Inliers = radii close to median

float tol = 6f; // pixels in downscaled space; disc edge should be tight

int inliers = 0;

double sum = 0;

double sumSq = 0;

double edgeSum = 0;

for (int i = 0; i < angles; i++)

{

if (radii[i] > 0f && System.Math.Abs(radii[i] - medianR) <= tol)

{

inliers++;

sum += radii[i];

sumSq += radii[i] * radii[i];

edgeSum += edges[i];

}

}

float inlierRatio = (float)inliers / angles;

float meanR = inliers > 0 ? (float)(sum / inliers) : 0f;

float varR = inliers > 1 ? (float)((sumSq / inliers) - (meanR * meanR)) : 999f;

float stdR = varR > 0 ? (float)System.Math.Sqrt(varR) : 0f;

float meanEdge = inliers > 0 ? (float)(edgeSum / inliers) : 0f;

// Final “big consistent circle” decision

bool bigCircle = meanR >= minDim * 0.40f; // disc occupies most of frame

bool consistent = stdR <= 4.5f; // tight radius consistency

bool manyAngles = inlierRatio >= 0.60f; // circle-like around most angles

bool strongEdge = meanEdge >= 0.07f; // visible edge

if (bigCircle && consistent && manyAngles && strongEdge)

return PhotoTag.Album;

return PhotoTag.Cover;

}

catch

{

return PhotoTag.Other;

}

}

private static bool InBounds(float x, float y, int w, int h)

=> x >= 0 && x <= (w - 1) && y >= 0 && y <= (h - 1);

private static float SampleLum(SKBitmap bmp, float x, float y)

{

int ix = ClampToInt(x, 0, bmp.Width - 1);

int iy = ClampToInt(y, 0, bmp.Height - 1);

var c = bmp.GetPixel(ix, iy);

float r = c.Red / 255f;

float g = c.Green / 255f;

float b = c.Blue / 255f;

return (0.2126f * r) + (0.7152f * g) + (0.0722f * b);

}

private static int ClampToInt(float v, int min, int max)

{

int i = (int)System.Math.Round(v);

if (i < min) return min;

if (i > max) return max;

return i;

}

private static SKBitmap? Downscale(SKBitmap original, int maxDim)

{

int w = original.Width;

int h = original.Height;

if (w <= maxDim && h <= maxDim)

return original.Copy();

float scale = System.Math.Min((float)maxDim / w, (float)maxDim / h);

int newW = System.Math.Max(1, (int)(w * scale));

int newH = System.Math.Max(1, (int)(h * scale));

return original.Resize(new SKImageInfo(newW, newH), SKFilterQuality.Medium);

}

}

}

It was “smart,” but it was useless. We spent an hour running in circles. The code was brittle, the logic was over-engineered, and I was getting frustrated. It felt like asking a junior developer for a script and getting back a doctoral thesis on geometry.

The Fix: The Gemini Pivot I decided to switch lanes. I pasted the exact same context into Gemini.

The difference was immediate. Gemini didn’t just dump code; it acted like a Senior Engineer. It recognized the problem wasn’t “math”—it was “classification.”

- It solved the problem in a handful of prompts.

- It explained why it chose specific libraries.

- It walked me through its different approaches as it tested them.

It didn’t just output text; it educated me. We weren’t “prompting”; we were collaborating. The “Vibe” was completely different—it felt like a partnership, not a vending machine.

public static class PhotoTagger

{

public static PhotoTag Classify(Stream inputStream)

{

try

{

if (inputStream == null || !inputStream.CanRead) return PhotoTag.Other;

if (inputStream.CanSeek) inputStream.Position = 0;

using var original = SKBitmap.Decode(inputStream);

if (original == null || original.Width < 64 || original.Height < 64)

return PhotoTag.Other;

// 1. Downscale

using var bmp = Downscale(original, 100);

if (bmp == null) return PhotoTag.Other;

// --- DATA GATHERING ---

SKColor bg = GetCornerColor(bmp);

// OUTER RING (75% - Near the edge)

var (outerColor, outerUniformity) = AnalyzeRingUniformity(bmp, 0.75f);

// INNER RING (35% - Close to the label)

var (innerColor, innerUniformity) = AnalyzeRingUniformity(bmp, 0.35f);

// LABEL (10% - Dead center)

SKColor labelColor = GetCenterColor(bmp, 0.10f);

// --- LOGIC CHECKS ---

// 1. UNIFORMITY: Vinyl is solid.

// If the outer ring is busy (text, complex art), it's a cover.

if (outerUniformity < 0.60f) return PhotoTag.Cover;

// 2. BACKGROUND CONTRAST:

// If the "record" looks just like the background, it's a cover (full bleed or white border).

if (GetColorDistance(outerColor, bg) < 35) return PhotoTag.Cover;

// 3. LABEL CONTRAST (The "Red Back Cover" Fix):

// A Record has a label (paper) distinct from the vinyl.

// A Solid Red Cover is Red everywhere.

if (GetColorDistance(outerColor, labelColor) < 25) return PhotoTag.Cover;

// 4. CONCENTRIC CONSISTENCY (The "B&W Brenda Lee" Fix):

// A Record is the same color at the edge (75%) and near the label (35%).

// A Cover with a vignette/border will be Light at 75% and Dark at 35%.

float ringDiff = GetColorDistance(outerColor, innerColor);

// We use a looser threshold (45) because glare often affects the outer ring more than the inner ring.

// But Brenda Lee (White vs Black) will have a huge diff (>100).

if (ringDiff > 45) return PhotoTag.Cover;

// If it passes all checks, it is almost certainly a record.

return PhotoTag.Album;

}

catch

{

return PhotoTag.Other;

}

}

private static (SKColor DominantColor, float Score) AnalyzeRingUniformity(SKBitmap bmp, float radiusPercent)

{

int cx = bmp.Width / 2;

int cy = bmp.Height / 2;

float radius = Math.Min(cx, cy) * radiusPercent;

int samples = 36;

List<SKColor> pixels = new List<SKColor>();

for (int i = 0; i < samples; i++)

{

double angle = i * (2.0 * Math.PI / samples);

int x = cx + (int)(Math.Cos(angle) * radius);

int y = cy + (int)(Math.Sin(angle) * radius);

x = Math.Clamp(x, 0, bmp.Width - 1);

y = Math.Clamp(y, 0, bmp.Height - 1);

pixels.Add(bmp.GetPixel(x, y));

}

// Find Dominant Color

int maxCount = 0;

SKColor bestColor = SKColors.Black;

foreach (var pivot in pixels)

{

int count = 0;

foreach (var other in pixels)

{

if (IsSimilar(pivot, other)) count++;

}

if (count > maxCount)

{

maxCount = count;

bestColor = pivot;

}

}

return (bestColor, (float)maxCount / samples);

}

private static SKColor GetCenterColor(SKBitmap bmp, float radiusPercent)

{

int cx = bmp.Width / 2;

int cy = bmp.Height / 2;

int r = (int)(Math.Min(bmp.Width, bmp.Height) * radiusPercent);

var c1 = bmp.GetPixel(cx, cy);

var c2 = bmp.GetPixel(cx - r/2, cy);

var c3 = bmp.GetPixel(cx + r/2, cy);

var c4 = bmp.GetPixel(cx, cy - r/2);

var c5 = bmp.GetPixel(cx, cy + r/2);

return AverageColors(new[] { c1, c2, c3, c4, c5 });

}

private static SKColor GetCornerColor(SKBitmap bmp)

{

int offset = 2;

var c1 = bmp.GetPixel(offset, offset);

var c2 = bmp.GetPixel(bmp.Width - 1 - offset, offset);

var c3 = bmp.GetPixel(offset, bmp.Height - 1 - offset);

var c4 = bmp.GetPixel(bmp.Width - 1 - offset, bmp.Height - 1 - offset);

return AverageColors(new[] { c1, c2, c3, c4 });

}

private static bool IsSimilar(SKColor c1, SKColor c2)

{

return GetColorDistance(c1, c2) < 45;

}

private static float GetColorDistance(SKColor c1, SKColor c2)

{

float rDiff = c1.Red - c2.Red;

float gDiff = c1.Green - c2.Green;

float bDiff = c1.Blue - c2.Blue;

return (float)Math.Sqrt(rDiff * rDiff + gDiff * gDiff + bDiff * bDiff);

}

private static SKColor AverageColors(SKColor[] colors)

{

long r = 0, g = 0, b = 0;

foreach (var c in colors) { r += c.Red; g += c.Green; b += c.Blue; }

return new SKColor((byte)(r / colors.Length), (byte)(g / colors.Length), (byte)(b / colors.Length));

}

private static SKBitmap Downscale(SKBitmap original, int maxDim)

{

int w = original.Width;

int h = original.Height;

if (w <= maxDim && h <= maxDim) return original.Copy();

float scale = Math.Min((float)maxDim / w, (float)maxDim / h);

return original.Resize(new SKImageInfo((int)(w * scale), (int)(h * scale)), SKFilterQuality.Low);

}The Insight: The “Trust Battery” This experience left me thinking about the future of these tools.

I am a “Power User.” I’m curious. I force myself to test different models, retry failures, and keep up with updates. I have a high tolerance for friction because I know the payoff is there.

But what about the average user?

The average user doesn’t care about “model weights” or “parameters.” They care about the result. If they try a tool like ChatGPT, get 2 hours of failure, and feel stupid, they may never come back to ChatGPT again.

Every time an LLM forces a wrong answer just to be helpful, it drains the user’s trust battery. If these companies aren’t careful, they won’t lose users to competitors; they’ll lose them to apathy.

The Stack:

- Task: Image Classification (Vinyl vs Cover)

- Failed Model: ChatGPT (Over-engineered pixel math)

- Winning Model: Gemini (Collaborative logic)